TL;DR

Cache Hit Ratio is the percentage of requests served from the cache out of all requests. A higher ratio means better caching efficiency, faster content delivery, and less load on your server. To boost your cache hit ratio, optimize your cache policies with suitable expiration times, use Cache-Control headers, and prioritize caching for static assets. Use Content Delivery Networks (CDNs) to distribute your cache more widely.

Optimizing your website’s performance without a solid caching policy in place is like trying to build a house on quicksand.

You can use the best materials and tools, but when your foundation isn’t rock solid, you will always feel like something hinders your progress.

Your caching policy is the backbone of your website’s performance. With a proper caching system in place, you can drastically improve your site’s speed.

Conversely, without a well-configured caching setup, many of your visitors’ requests will result in cache misses, which slow down your page load times.

Cache misses are just one of the topics covered in this article.

Let’s begin!


A Brief Introduction to Caching

Before delving into the world of cache hits and misses, it’s essential to understand how caching works, what purpose it serves, and why it’s considered one of the most effective speed optimization strategies.

To understand caching, we need to take a step back and follow the whole process that takes place behind the scenes when a visitor requests your site.

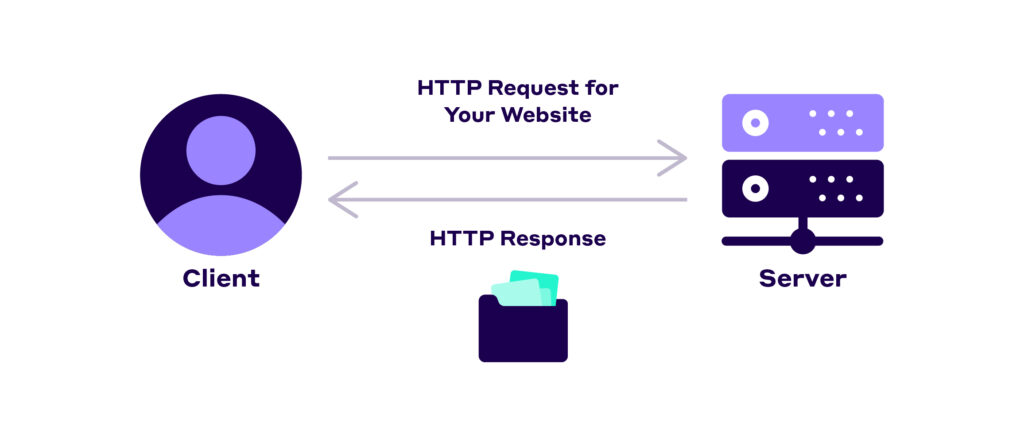

Your website is made of HTML documents, JavaScript and CSS files, images, etc. They are all stored on your origin server. When someone wants to visit your website, their browser sends a request to your origin server, which in turn sends back a response with all the necessary files.

When you have 10 visitors who simultaneously request your site’s files, there shouldn’t be a problem, and your server can handle them pretty easily.

However, this model breaks down when 100,000 visitors try to access your website at the same time.

Servers have a limit on how many requests they can handle simultaneously, and every request after that limit goes into a queue, resulting in longer loading times for your visitors.

That’s where caching comes in.

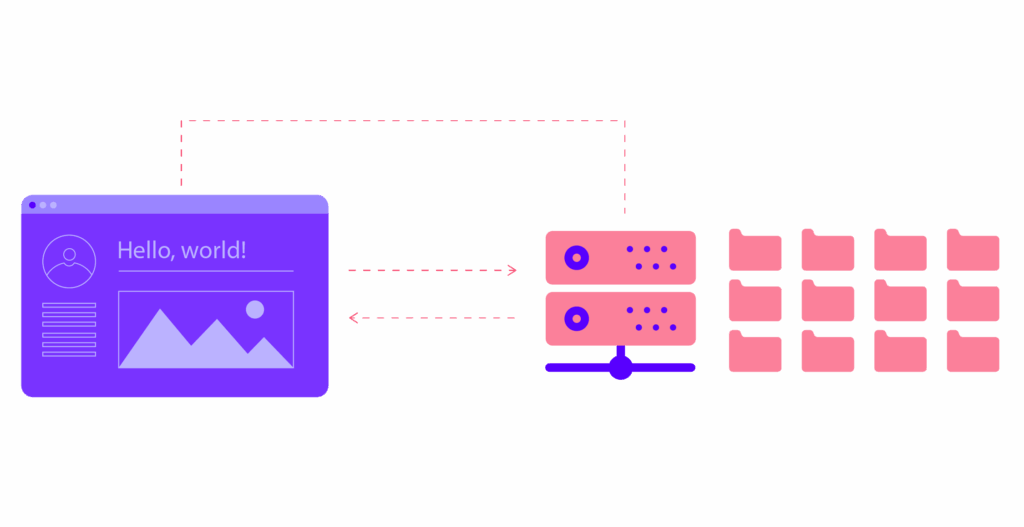

In a nutshell, caching is the process of saving a copy of your site data (HTML, CSS, JS, images, etc.) in a different location called a web cache.

The web cache acts as an intermediary between users and your origin server. That way, users’ requests can be served from the cache instead of being retrieved from your server.

As a result, your pages load way faster.

Caching can be classified in numerous ways. But for the purpose of this article, and to understand when cache hits and misses occur, we will take a glimpse at the two most popular types – browser and proxy caching.

Browser caching

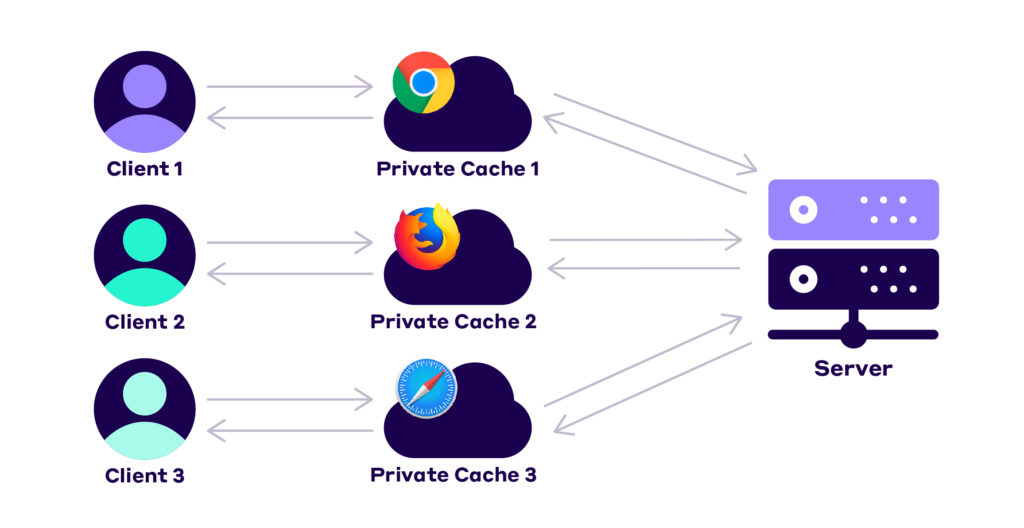

Each browser has its own caching policy, and it can store data locally on a user’s computer.

That’s useful because it makes loading a previously visited page blazing fast. In fact, browser caching is the main reason why the back and forward buttons can work their magic.

Unfortunately, browser caching works only per device: a copy stored on one visitor’s computer doesn’t help anyone else. But its biggest advantage is that it can eliminate entire network requests, which makes it a must-have.

Proxy server caching

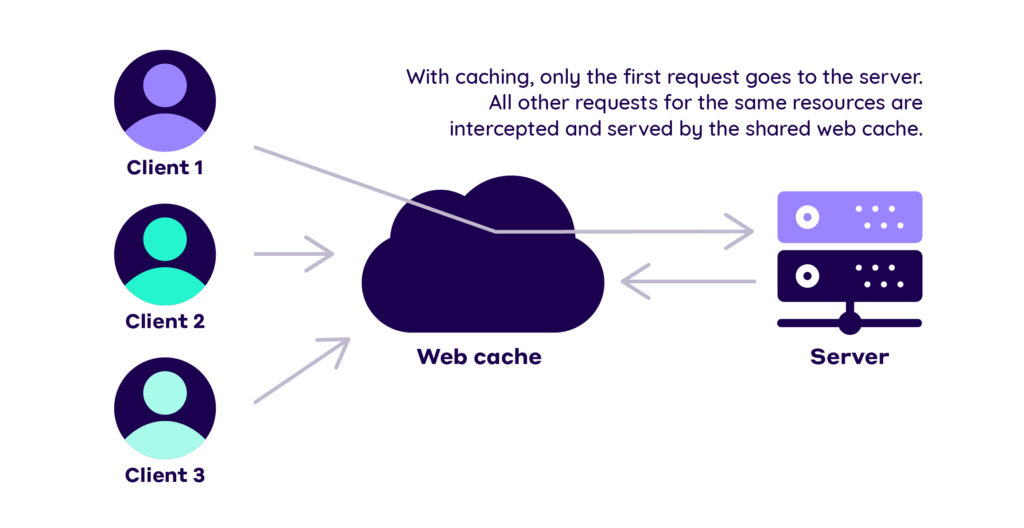

Proxy caching helps reduce the load on your server and deliver content faster to end users. The best-case scenario is when proxy caching works hand in hand with browser caching.

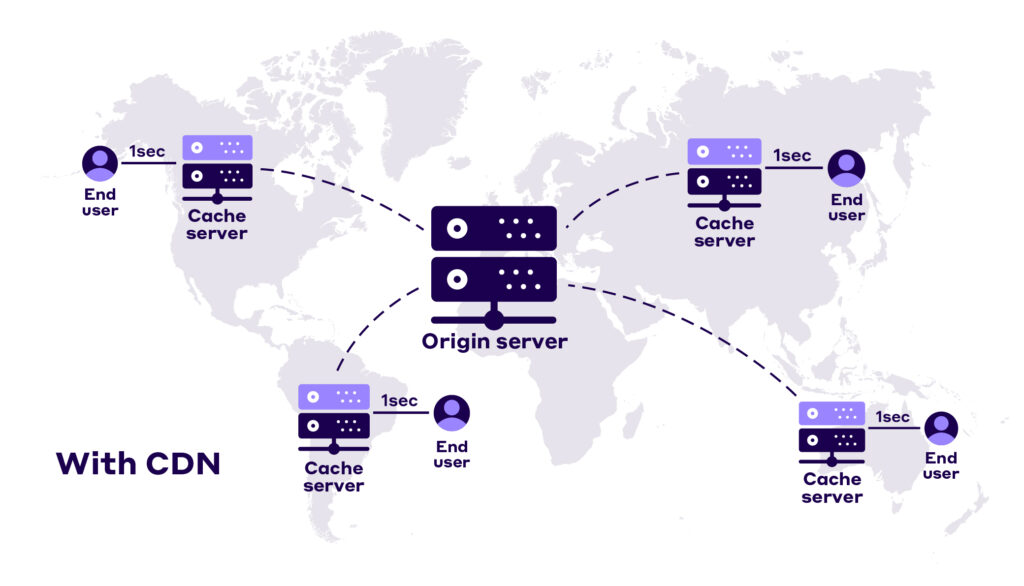

Proxy servers are distributed all over the world. They are usually maintained by CDN (Content Delivery Network) providers.

Proxies are intermediaries between the user and your origin server. You can use them to cache content in different locations, bringing your content closer to users and reducing latency and network traffic.

Unlike browser caching, proxy server caching isn’t limited per device, and it can simultaneously serve content to multiple users.

That’s the gist of caching. It’s a nuanced topic, and there is much more to unpack: the advantages and disadvantages of the different caching types, how to set up caching rules, and more.

But that’s not the focus of this article.

If you want to dive deeper into the topic, you can check our article – Web Caching 101: Beginner’s Guide To HTTP Caching (Examples, Tips and Strategies)

Or watch our YouTube video:

For now, it’s important to remember that when the requested data isn’t found in your web cache, delays and issues start to occur. This brings us to cache misses.

What is a Cache Miss?

A cache miss occurs when a system, application, or browser requests data from the cache, but that data is not currently stored in the cache.

When a cache miss occurs, the request gets forwarded to the origin server.

Once the data is retrieved from the origin, a copy is stored in the cache in anticipation of future requests for that same data.
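To make the miss-then-store flow concrete, here is a minimal read-through cache in Python. It is an illustrative sketch, not how any particular browser or CDN works: `ReadThroughCache` and `fake_origin` are hypothetical names, and the origin server is simulated by a plain function rather than a real HTTP request.

```python
from typing import Callable, Dict


class ReadThroughCache:
    """Minimal in-memory cache that falls back to the origin on a miss."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes]) -> None:
        self._fetch_from_origin = fetch_from_origin
        self._store: Dict[str, bytes] = {}
        self.hits = 0
        self.misses = 0

    def get(self, url: str) -> bytes:
        if url in self._store:
            # Cache hit: serve the stored copy without contacting the origin.
            self.hits += 1
            return self._store[url]
        # Cache miss: forward the request to the origin server...
        self.misses += 1
        body = self._fetch_from_origin(url)
        # ...then keep a copy for future requests for the same URL.
        self._store[url] = body
        return body


def fake_origin(url: str) -> bytes:
    # Hypothetical stand-in for a real HTTP request to the origin server.
    return f"<html>content of {url}</html>".encode()


cache = ReadThroughCache(fake_origin)
cache.get("/products/new-item")  # first visitor: miss, fetched from the origin
cache.get("/products/new-item")  # second visitor: hit, served from the cache
print(cache.hits, cache.misses)  # 1 1
```

Real caches layer expiration, size limits, and concurrency control on top of this basic pattern.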

There are multiple reasons for a cache miss to occur.

For instance, the data may never have been cached in the first place.

Let’s say that you have an eCommerce site and you’ve just added a new product page. None of its images, HTML, CSS, or JavaScript files have been added to the cache yet, because no one has requested them. That means your first visitor’s request has to go to your origin in order to load the page. After that first request, the data is stored in the cache, and subsequent requests are served from it.

Another possible cause of a cache miss is that the cached data was removed at some point.

Again, several things might have led to that scenario: more space was needed, an application requested the removal, or the data’s Time to Live (TTL) expired.
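The removal scenarios above (running out of space, explicit removal, and an expired TTL) can be illustrated with a small in-memory cache. This sketch assumes a least-recently-used (LRU) eviction policy, which is one common choice but not the only one; `TTLLRUCache` is a hypothetical class, and the injectable `clock` exists only so the example can simulate the passage of time.

```python
import time
from collections import OrderedDict
from typing import Callable, Optional, Tuple


class TTLLRUCache:
    """Cache whose entries expire after a TTL and are evicted LRU-first when full."""

    def __init__(self, capacity: int, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.ttl_seconds = ttl_seconds
        self._clock = clock
        # Maps key -> (value, expiry timestamp); insertion order tracks recency of use.
        self._entries: "OrderedDict[str, Tuple[bytes, float]]" = OrderedDict()

    def get(self, key: str) -> Optional[bytes]:
        entry = self._entries.get(key)
        if entry is None:
            return None  # never cached, or removed earlier: a miss
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # TTL expired: treat as a miss
            return None
        self._entries.move_to_end(key)  # mark as most recently used
        return value

    def put(self, key: str, value: bytes) -> None:
        if key in self._entries:
            self._entries.move_to_end(key)
        self._entries[key] = (value, self._clock() + self.ttl_seconds)
        if len(self._entries) > self.capacity:
            # More space needed: evict the least recently used entry.
            self._entries.popitem(last=False)

    def delete(self, key: str) -> None:
        # Explicit removal, e.g. requested by an application.
        self._entries.pop(key, None)


now = [0.0]
cache = TTLLRUCache(capacity=2, ttl_seconds=60, clock=lambda: now[0])
cache.put("/a", b"A")
cache.put("/b", b"B")
cache.put("/c", b"C")   # capacity exceeded: "/a" is evicted
print(cache.get("/a"))  # None (evicted for space)
now[0] = 61.0
print(cache.get("/b"))  # None (TTL expired)
```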

We will touch more on Time to Live later in the article.

Whatever the reason, cache misses lead to higher latency, slower load times, a worse user experience, and unsatisfied visitors.

However, I don’t want to leave you with the impression that you need to achieve cache hits 100% of the time. That’s not realistic.

What’s more, there are some cases where cache misses are necessary, as you might need to ensure that the content you serve is always up-to-date.

For instance, a news website like bbc.com can change its home page content several times throughout the day as breaking news needs to be reported. In this case, serving an outdated copy from the cache (a cache hit) would mean that readers don’t see the latest version of the site and, consequently, miss the latest news.

So there is an upside to the occurrence of a cache miss.

However, if you don’t have a news website and don’t change your content regularly, you should aim to keep cache misses as low as possible, and cache hits as high as possible.

What is a Cache Hit?

A cache hit occurs when the requested data is successfully served from the cache.

For instance, if a user visits one of your product pages that displays an image of the product you sell, their browser will request this image, and the request will be checked against a cache (the browser’s own cache or a CDN). If the CDN has a copy of the image in its storage, the request results in a cache hit, and the image is sent back to the browser.

What is a Cache Hit Ratio and How to Calculate It?

Cache hit ratio measures how many requests a cache has delivered successfully from its storage, compared to how many requests it received in total.

A high cache hit ratio means that most of your users’ requests have been fulfilled by the cache, which in turn means that they experienced faster load times.

You can calculate your cache hit ratio using this formula:

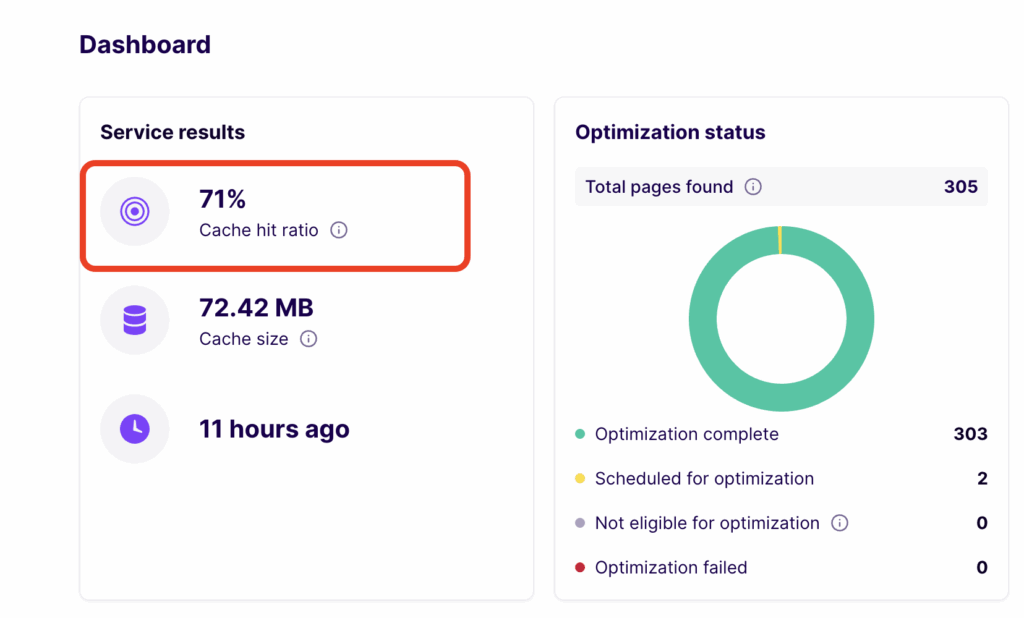
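Expressed in code, the ratio is the number of cache hits divided by the total number of requests (hits plus misses), multiplied by 100. The function name and the traffic numbers below are hypothetical, chosen only to illustrate the arithmetic.

```python
def cache_hit_ratio(hits: int, misses: int) -> float:
    """Return the cache hit ratio as a percentage of all requests."""
    total_requests = hits + misses
    if total_requests == 0:
        return 0.0  # no traffic yet; avoid dividing by zero
    return 100 * hits / total_requests


# Hypothetical numbers: 9,000 of 10,000 requests were served from the cache.
print(cache_hit_ratio(hits=9_000, misses=1_000))  # 90.0
```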

However, you may not need to calculate it yourself, as most CDN providers do this for you.

NitroPack’s dashboard displays this information as well.

What is a Good Cache Hit Ratio?

As a general rule of thumb, a cache hit ratio of 80% or higher is a good result, as it means that most requests are served from the cache.

On static websites, anything below 80% usually indicates an inefficient caching policy.

According to Cloudflare, one of the biggest CDN providers in the world:

“A typical website that’s mostly made up of static content could easily have a cache hit ratio in the 95-99% range.”

The global Cache Hit Ratio for NitroPack is 90%. And roughly 70% of all NitroPack users experience a Cache Hit Ratio of 80% or higher.

How to Increase Cache Hit Ratio?

You can take specific steps to reduce the number of cache misses and hence increase your cache hit ratio.

1. Set up caching rules based on your website’s needs

The Cache-Control header allows you to set a wide range of caching rules in order to optimize how your content is served.

Some of the rules include:

- no-store tells web caches not to store any version of the resource under any circumstances;

- no-cache tells the web cache that it must validate the cached content with the origin server before serving it to users;

- max-age sets the maximum amount of time (in seconds) that a cached resource is considered fresh. After that, the content is marked as stale and needs to be revalidated with the origin server or re-downloaded;

- s-maxage does the same thing as max-age but applies only to shared caches such as proxies and CDNs, where it overrides max-age;

- private tells web caches that only private caches (such as the user’s browser) can store the response;

- public marks the response as public, meaning that any cache, including shared intermediate caches, can store it.
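To see how these directives interact, here is a simplified sketch of how a cache might turn a Cache-Control value into a freshness lifetime. It assumes a much-reduced reading of the HTTP caching rules: it ignores the Expires header, heuristic freshness, and other directives, and treats a response with no explicit lifetime as needing revalidation. `parse_cache_control` and `freshness_lifetime` are hypothetical helpers, not part of any library.

```python
from typing import Dict, Optional


def parse_cache_control(header: str) -> Dict[str, Optional[str]]:
    """Split a Cache-Control value into {directive: argument or None}."""
    directives: Dict[str, Optional[str]] = {}
    for part in header.split(","):
        part = part.strip()
        if not part:
            continue
        name, sep, value = part.partition("=")
        directives[name.strip().lower()] = value.strip().strip('"') if sep else None
    return directives


def freshness_lifetime(header: str, shared_cache: bool) -> Optional[int]:
    """Seconds a response may be reused without revalidation (simplified).

    Returns None if this kind of cache must not store the response at all,
    and 0 if it may store it but must revalidate before every use.
    """
    d = parse_cache_control(header)
    if "no-store" in d:
        return None
    if shared_cache and "private" in d:
        return None  # only private caches (e.g. the browser) may store it
    if "no-cache" in d:
        return 0  # stored, but must be validated with the origin first
    if shared_cache and d.get("s-maxage") is not None:
        return int(d["s-maxage"])  # s-maxage overrides max-age in shared caches
    if d.get("max-age") is not None:
        return int(d["max-age"])
    return 0  # simplification: no explicit lifetime, so always revalidate


header = "public, max-age=600, s-maxage=86400"
print(freshness_lifetime(header, shared_cache=False))  # 600 (browser)
print(freshness_lifetime(header, shared_cache=True))   # 86400 (CDN or proxy)
print(freshness_lifetime("private, max-age=600", shared_cache=True))  # None
```

With a header like this, a CDN could keep the response for a day while browsers revalidate it after ten minutes.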

You should set a Time to Live (how long the cache will hold onto your data before it retrieves updated information from the origin) that best fits your content.

For instance, if an asset changes approximately every two months, a max-age of 50 days (4,320,000 seconds) may be appropriate. However, if the asset changes on a daily basis, you may want to use the no-cache directive instead.
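Because max-age is expressed in seconds, it helps to convert the TTL you have in mind before writing the header. The helper below is a hypothetical sketch; adding `public` assumes the asset contains no user-specific data and can be stored by shared caches such as CDNs.

```python
from typing import Optional

SECONDS_PER_DAY = 24 * 60 * 60


def cache_control_for(ttl_days: Optional[float]) -> str:
    """Build a Cache-Control value from a chosen Time to Live.

    Pass None for content that changes so often (e.g. daily) that caches
    should revalidate it with the origin before every use.
    """
    if ttl_days is None:
        return "no-cache"
    max_age = int(ttl_days * SECONDS_PER_DAY)  # max-age is expressed in seconds
    return f"public, max-age={max_age}"


print(cache_control_for(50))    # public, max-age=4320000
print(cache_control_for(None))  # no-cache
```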

You can find more information on cache control headers, cache freshness, and validation in our YouTube video:

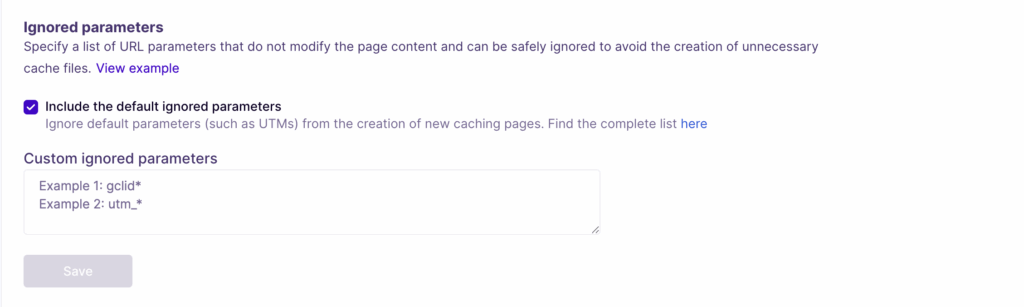

2. Ignore UTM parameters

Running multiple ad campaigns (e.g., Facebook ads, Google ads, etc.) generates different URLs with specific UTM parameters.

As a result, you have a single page with multiple URL variations (one per set of UTM parameters).

That is a problem because caches treat each URL variation as a unique object, so requests for each new variation are forwarded to the origin server.

Each of those requests is counted as a cache miss, even though the same content is already available in the web cache under a different URL.

This needlessly lowers your cache hit ratio.
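One common fix is to strip tracking parameters from the URL before using it as the cache key. The sketch below assumes that every parameter starting with `utm_` is safe to ignore (i.e., it doesn’t change the page content); `cache_key`, `hit_ratio`, and the example URLs are hypothetical. It also replays a few campaign URLs to show the effect on the hit ratio.

```python
from typing import Iterable, List, Set, Tuple
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

IGNORED_PREFIXES: Tuple[str, ...] = ("utm_",)  # assumption: every utm_* key is ignorable


def cache_key(url: str, ignored_prefixes: Iterable[str] = IGNORED_PREFIXES) -> str:
    """Drop tracking parameters so URL variants share one cache entry."""
    prefixes = tuple(ignored_prefixes)
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
            if not k.lower().startswith(prefixes)]
    return urlunsplit((parts.scheme, parts.netloc, parts.path,
                       urlencode(kept), parts.fragment))


def hit_ratio(urls: List[str], normalize: bool) -> float:
    """Replay requests against an empty cache and return the hit ratio in %."""
    if not urls:
        return 0.0
    cached: Set[str] = set()
    hits = 0
    for url in urls:
        key = cache_key(url) if normalize else url
        if key in cached:
            hits += 1
        else:
            cached.add(key)  # miss: fetched from the origin, then cached
    return 100 * hits / len(urls)


campaign_urls = [
    "https://example.com/shoes?utm_source=facebook&utm_campaign=spring",
    "https://example.com/shoes?utm_source=google&utm_campaign=spring",
    "https://example.com/shoes?utm_source=newsletter",
    "https://example.com/shoes",
]
print(hit_ratio(campaign_urls, normalize=False))  # 0.0
print(hit_ratio(campaign_urls, normalize=True))   # 75.0
```

Note that `urlencode` may re-encode the remaining parameters slightly differently from the original URL (for example, spaces become `+`), which is harmless as long as every request goes through the same normalization.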

NitroPack – The Easiest Way to Achieve Excellent Cache Hit Ratio

After everything we’ve covered, the whole process of achieving a high cache hit ratio might sound a bit overwhelming.

The good news is that if you are working with a CDN provider, much of what I mentioned, like Cache-Control headers, may already be taken care of for you.

For everything else, you can use a caching plugin.

For instance, NitroPack comes with out-of-the-box features designed to deliver a high cache hit ratio for our users. These include:

- Cache Warmup simulates organic visits to your website, prompting NitroPack to prepare optimized (cached) versions of your website for desktop and mobile devices.

- Cache Invalidation marks the cached content as “stale” but keeps serving it until newly optimized content is available. As a result, your visitors always see optimized content, even though it may be outdated for a short while.

- Built-in CDN, which eliminates the need to sync your CDN with your caching plugin of choice. That syncing is often time-consuming and inconvenient, and it may cause unexpected issues.

- An Ignored Parameter option that allows NitroPack to ignore some commonly used UTM (and other) parameters by default so that you don’t need to worry when running your marketing campaigns.
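To illustrate the general ideas behind warmup and serve-stale invalidation, here is a small generic sketch. It is not NitroPack’s implementation: `OptimizedPageCache` and `build_page` are hypothetical, pages are plain strings, and the refresh step that would normally run in the background is triggered manually so the example stays synchronous.

```python
from typing import Callable, Dict, Iterable, List, Set, Tuple

Key = Tuple[str, str]  # (url, device type)


class OptimizedPageCache:
    """Generic sketch: cache warmup plus serving stale pages after invalidation."""

    def __init__(self, build_page: Callable[[str, str], str]) -> None:
        self._build_page = build_page  # hypothetical: builds an optimized page
        self._pages: Dict[Key, str] = {}
        self._stale: Set[Key] = set()

    def warm_up(self, urls: Iterable[str],
                devices: Iterable[str] = ("desktop", "mobile")) -> None:
        # Build pages ahead of time so the first real visitor gets a cache hit.
        device_list = list(devices)
        for url in urls:
            for device in device_list:
                self._pages[(url, device)] = self._build_page(url, device)

    def invalidate(self, url: str) -> None:
        # Mark every device variant of the URL as stale instead of deleting it.
        for key in self._pages:
            if key[0] == url:
                self._stale.add(key)

    def get(self, url: str, device: str) -> str:
        key = (url, device)
        if key not in self._pages:
            # Genuine miss: build the page on demand (the slow path).
            self._pages[key] = self._build_page(url, device)
        # Stale pages are still served until refresh_stale() replaces them.
        return self._pages[key]

    def refresh_stale(self) -> List[Key]:
        # Rebuild stale pages; a real system would do this in the background.
        refreshed = sorted(self._stale)
        for url, device in refreshed:
            self._pages[(url, device)] = self._build_page(url, device)
        self._stale.clear()
        return refreshed


version = {"n": 1}


def build_page(url: str, device: str) -> str:
    # Hypothetical page builder whose output depends on the current content version.
    return f"{url} v{version['n']} ({device})"


cache = OptimizedPageCache(build_page)
cache.warm_up(["/pricing"])
version["n"] = 2                        # content changes at the origin
cache.invalidate("/pricing")
print(cache.get("/pricing", "mobile"))  # /pricing v1 (mobile), stale but still served
cache.refresh_stale()
print(cache.get("/pricing", "mobile"))  # /pricing v2 (mobile)
```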

But you don’t have to take my word for it. Test NitroPack for free and see its impact for yourself.